Top Feedback Metrics for AI Training Success

NWA AI Team

Editor


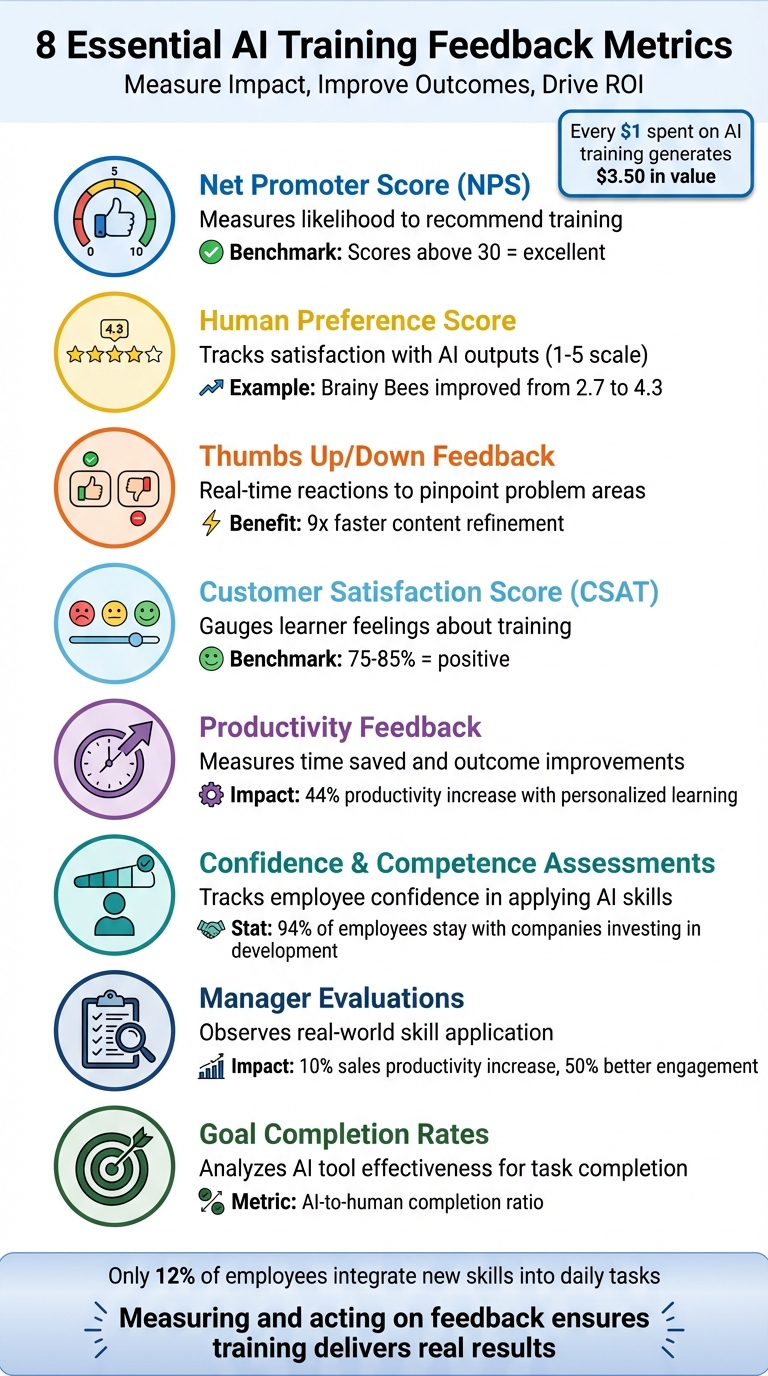

AI training works best when you can measure its impact. This article highlights eight metrics that help organizations track and improve training programs. These metrics reveal whether employees are learning, applying new skills, and improving productivity. Here's what you need to know:

- Net Promoter Score (NPS): Measures how likely participants are to recommend the training. Scores above 30 indicate success.

- Human Preference Score: Tracks satisfaction with AI outputs and identifies areas for improvement.

- Thumbs Up/Down Feedback: Simple, real-time reactions to training content, pinpointing problem areas.

- Customer Satisfaction Score (CSAT): Gauges how learners feel about the training, with scores above 75% being positive.

- Productivity Feedback: Measures whether employees are using AI to save time or improve outcomes.

- Confidence and Competence Assessments: Tracks how confident employees feel applying new AI skills.

- Manager Evaluations: Provides insights into how well employees apply training in their roles.

- Goal Completion Rates: Analyzes how effectively AI tools are used to complete tasks.

These metrics help organizations refine training programs, align them with business goals, and track ROI. For example, every $1 spent on AI training can generate $3.50 in value. The key takeaway? Measuring and acting on feedback ensures training delivers real results.

8 Essential AI Training Feedback Metrics for Measuring Success

1. Net Promoter Score (NPS)

Measuring Participant Satisfaction

The Net Promoter Score (NPS) boils down to one simple question: "How likely are you to recommend this training to a colleague?" Participants respond on a scale from 0 to 10. Here's how it breaks down: those scoring 9–10 are labeled as Promoters, 7–8 are Passives, and 0–6 are Detractors. To calculate the NPS, subtract the percentage of Detractors from the percentage of Promoters.
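As a rough illustration, the calculation above can be sketched in Python. The function name and sample ratings are hypothetical. The score ranges from -100 (all Detractors) to 100 (all Promoters).

```python
def net_promoter_score(ratings: list[int]) -> float:
    """Return NPS (-100..100) from answers on the 0-10 'would you recommend' scale."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)   # 9-10
    detractors = sum(1 for r in ratings if r <= 6)  # 0-6; 7-8 are Passives
    # % Promoters minus % Detractors
    return 100 * (promoters - detractors) / len(ratings)


# 10 hypothetical responses: 5 Promoters, 3 Passives, 2 Detractors
responses = [10, 9, 9, 10, 9, 8, 7, 7, 5, 3]
print(net_promoter_score(responses))  # 30.0
```

Passives count toward the total but not toward either group, so they pull the score toward zero without lowering it directly.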

According to Erik van Vulpen from AIHR, scores between 0 and 30 indicate solid performance, scores above 30 are excellent, and anything below 0 signals major issues. This simple framework lets training managers quickly assess whether their AI training programs are hitting the mark or falling short.

Easy to Use and Track

Modern Learning Management Systems (LMS) simplify the process by automatically sending NPS surveys right after training sessions, which boosts response rates. The single-question design keeps things brief, while the familiar 0–10 scale ensures clarity. Plus, because NPS is widely recognized in the business world, executives can easily understand the results without needing extra explanation.

Given that only 13% of companies currently evaluate the ROI of their Learning and Development efforts, metrics like NPS provide a straightforward way to establish a baseline. Automated tools within LMS platforms also allow for real-time dashboards, offering insights into trends across departments or roles.

Improving AI Training Programs

Low NPS scores often point to outdated or irrelevant material - something that happens frequently in AI training, as tools and technologies evolve so quickly.

"A high NPS correlates with high engagement. It generally shows that content is relevant, concise, and immediately useful." - 360Learning

Interestingly, organizations that rely on AI-driven KPIs are 5x more likely to achieve better alignment across business functions. By pairing NPS with open-ended feedback, training teams can dig into specific frustrations and fine-tune their content for future sessions.

This focus on participant sentiment sets the stage for exploring other feedback metrics that can further enhance AI training programs.

2. Human Preference Score

Measuring Participant Satisfaction

Human preference scores offer a deeper look into training efficiency, complementing metrics like NPS. For example, the Prompt-to-Result Satisfaction Score (P2RSS) used at Brainy Bees asks participants: "Did the AI output help you complete your task? Rate from 1 to 5." This simple question captures satisfaction and also helps fine-tune AI training processes to deliver better results.

Tracking Real-World Impact of Training

These scores provide instant feedback to assess if AI training actually leads to productivity improvements. In June 2025, Brainy Bees introduced P2RSS and saw their average satisfaction score climb from 2.7 to 4.3 after refining their prompt strategies.

"The goal isn't to just use AI - it's to use AI to speed things up or make things better." – Jessica Lau, Senior Content Specialist at Zapier

Similarly, Tyler Butler, founder of Collaboration For Good, monitored the "insight adoption rate", a measure of how often clients implemented AI-generated ESG insights into their strategies. This data helped the firm adjust how insights were presented, making them more actionable. Meanwhile, DHL advisor Viraj Lele noted that even small accuracy improvements favored by users led to a 12% increase in average order value and a 15% rise in repeat purchases.

Ease of Implementation and Aggregation

One of the biggest advantages of human preference scores is their simplicity. By embedding star ratings or thumbs up/down options directly into AI tools, organizations can gather instant feedback. These scores can be aggregated using methods like consensus voting or pass/fail thresholds combined with numerical ratings. Tracking both satisfaction scores and the number of prompt attempts required for a successful outcome highlights areas needing further training or tool refinement. This aggregated data plays a critical role in improving AI training content.
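Here is a minimal sketch of this kind of aggregation. It assumes each feedback record holds a 1-5 rating and the number of prompt attempts needed. The `Feedback` class, the `summarize` function, and the pass threshold of 4 are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Feedback:
    rating: int    # 1-5 answer to "Did the AI output help you complete your task?"
    attempts: int  # prompt attempts before an acceptable result (hypothetical field)


def summarize(feedback: list[Feedback], pass_threshold: int = 4) -> dict[str, float]:
    """Combine a numerical rating average with a pass/fail rate and attempt count."""
    if not feedback:
        raise ValueError("no feedback to summarize")
    ratings = [f.rating for f in feedback]
    return {
        "mean_rating": mean(ratings),
        # share of responses at or above the (assumed) pass threshold
        "pass_rate": sum(r >= pass_threshold for r in ratings) / len(ratings),
        "mean_attempts": mean(f.attempts for f in feedback),
    }


batch = [Feedback(5, 1), Feedback(4, 2), Feedback(2, 4), Feedback(3, 3)]
print(summarize(batch))
# {'mean_rating': 3.5, 'pass_rate': 0.5, 'mean_attempts': 2.5}
```

Low ratings paired with high attempt counts are the pattern that points to a prompting-skills gap rather than a tool problem.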

Relevance to Improving AI Training Programs

Human preference data provides direct insights for refining AI training programs. If feedback scores fall short of expectations, it often signals that the AI output lacks context or that users need better prompting techniques.

"Since we rely on AI to support content work, we need to measure how useful the output actually is and if it helps move the task or project forward." – Kinga Edwards, CEO, Brainy Bees

3. Thumbs Up/Thumbs Down Feedback

Ease of Implementation and Aggregation

Using thumbs up/down feedback is a simple yet effective way to gauge learner reactions. Embedding these buttons, or real-time chatbots, directly into training modules captures responses the moment learners react, rather than relying on post-training surveys.

"Embed feedback tools directly within training for real-time insights." – Paul Leone, Ph.D, Founder and Principal Consultant, MeasureUp Consulting

AI-driven tools and natural language processing (NLP) take binary feedback a step further by analyzing trends and identifying frustrations. For example, comments tied to thumbs down ratings can reveal whether content feels overly technical, outdated, or unengaging. These automated systems summarize feedback efficiently, helping learning and development (L&D) teams refine content up to nine times faster than manual reviews. This streamlined process ensures feedback is quickly translated into actionable improvements.

Relevance to Improving AI Training Programs

Thumbs down ratings act as clear indicators of trouble spots within training programs. By placing feedback prompts at the end of each major module, rather than waiting until the course concludes, you can pinpoint where learners face challenges or lose interest. For instance, linking negative feedback to specific dropout points helps clarify if issues arise from overly complex explanations or lackluster content.
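One way to link thumbs-down ratings to specific modules is to compute each module's thumbs-down share and flag the worst offenders. This sketch assumes feedback arrives as (module, thumbs_up) pairs. The module names and the 30% threshold are hypothetical.

```python
from collections import defaultdict


def thumbs_down_rates(events: list[tuple[str, bool]]) -> dict[str, float]:
    """events: (module_id, thumbs_up) pairs -> share of thumbs-down per module."""
    totals: defaultdict[str, int] = defaultdict(int)
    downs: defaultdict[str, int] = defaultdict(int)
    for module, thumbs_up in events:
        totals[module] += 1
        if not thumbs_up:
            downs[module] += 1
    return {m: downs[m] / totals[m] for m in totals}


def flag_modules(rates: dict[str, float], threshold: float = 0.3) -> list[str]:
    """Modules whose thumbs-down share exceeds the threshold, worst first."""
    flagged = [m for m, r in rates.items() if r > threshold]
    return sorted(flagged, key=lambda m: rates[m], reverse=True)


events = [
    ("intro", True), ("intro", True), ("intro", False),
    ("prompting", False), ("prompting", False), ("prompting", True),
    ("ethics", True), ("ethics", True),
]
rates = thumbs_down_rates(events)
print(flag_modules(rates))  # ['prompting', 'intro']
```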

This detailed approach allows organizations to determine whether low ratings are caused by confusing material or technical issues. Such insights are particularly valuable, considering that 22% of employees view effective training as a form of recognition. By addressing these concerns, organizations can enhance both learning outcomes and employee retention.

4. Customer Satisfaction Score (CSAT)

Effectiveness in Measuring Participant Satisfaction

The Customer Satisfaction Score (CSAT) is a straightforward way to gauge the "Reaction" level of training programs. It reflects how learners feel about the training and how useful they find it. In AI training, this metric focuses on aspects like delivery quality, content relevance, and AI-assisted features.

The process is simple: surveys typically ask questions like, "How satisfied were you with this training?" Participants respond using a scale - commonly 1 to 5, 1 to 10, or a percentage. To calculate CSAT, divide the number of positive responses (e.g., ratings of 4 or 5 on a 5-point scale) by the total responses, then multiply by 100. Scores between 75% and 85% are considered positive, while industry averages fall between 74% and 80%. This quick, efficient method makes it easy to gather and analyze feedback.
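The CSAT formula translates directly into code. This is a minimal sketch. It assumes a 5-point scale where ratings of 4 or 5 count as positive, and the sample ratings are made up.

```python
def csat(ratings: list[int], scale_max: int = 5, positive_min: int = 4) -> float:
    """CSAT % = positive responses / total responses * 100."""
    if not ratings:
        raise ValueError("need at least one response")
    if any(not 1 <= r <= scale_max for r in ratings):
        raise ValueError("rating outside the survey scale")
    positive = sum(1 for r in ratings if r >= positive_min)
    return 100 * positive / len(ratings)


ratings = [5, 4, 4, 3, 5, 2, 4, 5]
print(csat(ratings))  # 75.0
```

Here 6 of 8 responses are positive, giving 75%, the low end of the positive band.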

Ease of Implementation and Aggregation

CSAT surveys are designed for speed and convenience, allowing organizations to gather immediate feedback and address issues right away. Surveys can be distributed through various channels, including email, SMS, in-app notifications, or website pop-ups, ensuring a diverse and representative set of responses.

Quick feedback is critical.

To capture the most accurate data, surveys should be issued right after the training session while the experience is still fresh. AI tools can help streamline this process by summarizing feedback and identifying common challenges within the program. However, to get the most meaningful insights, numerical scores should be paired with open-ended questions. These follow-ups can reveal details about difficulties, interest in the topics, or areas where the content might feel repetitive.

Relevance to Improving AI Training Programs

CSAT is more than just a satisfaction measure - it provides actionable insights that can directly enhance AI training programs. It helps quantify the effectiveness of training and its impact on learner performance. For example, high satisfaction scores often correlate with better engagement and personalized learning experiences, which can boost productivity by as much as 44%.

This metric is also useful for spotting "AI drift", where the effectiveness of training diminishes over time. A drop in satisfaction scores can act as an early warning sign. Despite its importance, only 35% of businesses currently track AI performance metrics, even though 80% list reliability as a major concern. Monitoring CSAT alongside other data, like completion rates and performance metrics, helps organizations move beyond vanity metrics to assess real business impact.
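A simple way to turn a drop in satisfaction scores into an alert is to compare a recent window of CSAT results against a baseline window. The window size and the 5-point tolerance below are assumptions to tune, and the monthly scores are invented for illustration.

```python
from statistics import mean


def detect_drift(scores: list[float], window: int = 3, tolerance: float = 5.0) -> bool:
    """Return True when the mean of the latest `window` scores is more than
    `tolerance` points below the mean of the first `window` scores."""
    if window < 1 or len(scores) < 2 * window:
        raise ValueError("need at least two full windows of scores")
    baseline = mean(scores[:window])  # earliest scores as the reference level
    recent = mean(scores[-window:])   # most recent scores
    return baseline - recent > tolerance


monthly_csat = [82.0, 80.0, 81.0, 78.0, 74.0, 72.0, 71.0]
print(detect_drift(monthly_csat))  # True: baseline 81.0 vs. recent ~72.3
```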

Retention is another area where CSAT proves valuable. Without regular reinforcement, learners can forget about 75% of new information within six days. This makes immediate feedback not just helpful but essential for improving retention and long-term learning outcomes.

5. Employee Feedback on Productivity Gains

Ability to Track Real-World Impact of Training

When it comes to measuring productivity gains, one key focus is learning transfer - essentially, whether employees are applying AI skills in their actual work beyond the training sessions. This goes beyond tracking completion rates and tackles the bigger question: Is AI genuinely making tasks faster or improving outcomes?

For instance, personalized AI-assisted learning has the potential to increase productivity by 44%. However, only 12% of employees currently integrate new skills from training into their daily tasks. This disconnect is a major reason why 92% of business leaders report seeing no measurable impact from their learning initiatives. Without consistent feedback on productivity, it becomes nearly impossible to differentiate between AI tools that truly enhance efficiency and those that fall short. Capturing these shifts in workplace performance highlights the tangible benefits of AI training.

Effectiveness in Measuring Participant Satisfaction

Much like metrics such as NPS or CSAT, gathering feedback on productivity helps refine AI training by focusing on how effectively skills are applied in real-world scenarios. A practical tool for this is the Prompt-to-Result Satisfaction Score (P2RSS), which asks employees to rate AI output on a 1-5 scale based on how quickly they achieved results and how many attempts were required. For example, in June 2025, Brainy Bees CEO Kinga Edwards implemented P2RSS to evaluate the efficiency of AI in content creation. Initially, the team scored an average of 2.7/5, signaling that too much time was being spent refining AI outputs. After improving prompting techniques based on this feedback, the score rose significantly to 4.3/5.

Another effective method involves pre- and post-training benchmarking. Employees complete a specific task before training to establish a baseline, then repeat the task afterward to measure improvements in time and efficiency. By multiplying the time saved by an employee’s hourly rate, organizations can calculate financial benefits. For example, reducing time-to-competency by just 30 days can generate an additional $12,000 in value per employee. These measurable outcomes provide a clear picture of the success of AI training programs.
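The benchmarking arithmetic can be sketched as follows. All inputs (task times, monthly frequency, hourly rate, and training cost) are hypothetical. ROI here uses the common (benefit - cost) / cost definition.

```python
def benchmark_value(pre_minutes: float, post_minutes: float,
                    runs_per_month: int, hourly_rate: float) -> float:
    """Monthly dollar value of time saved on a task benchmarked before and after training."""
    saved_hours = (pre_minutes - post_minutes) / 60 * runs_per_month
    return saved_hours * hourly_rate


def roi(benefit: float, cost: float) -> float:
    """ROI as net benefit divided by cost (2.0 means 200%)."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


# Hypothetical employee: task drops from 90 to 60 minutes, done 20x a month, $45/hour.
monthly = benchmark_value(90, 60, 20, 45.0)
print(monthly)                  # 450.0
print(roi(monthly * 12, 1500))  # 2.6 against a hypothetical $1,500 training cost
```

Under this definition, the "$3.50 in value per $1 spent" figure cited earlier corresponds to an ROI of 2.5, or 250%.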

Relevance to Improving AI Training Programs

Numbers alone don’t tell the full story - qualitative feedback is essential for identifying specific gaps in training. For example, Syed Balkhi, founder of WPBeginner, used a "Resolution Gap Index" to measure discrepancies between AI recommendations and actual issue resolutions. The findings revealed that while the AI was technically accurate, it lacked contextual understanding, such as accounting for customer tier. This insight led to enhancements in the AI’s memory and contextual capabilities.

Open-ended feedback can also uncover barriers like unclear instructions or insufficient practice opportunities. Combining employee self-assessments with manager observations - using structured rubrics - helps ensure that reported improvements translate into real-world behavior changes. These insights not only refine AI training but also ensure it aligns better with employees' needs and workplace realities.


6. Confidence and Competence Self-Assessments

Measuring Participant Satisfaction Effectively

Self-assessments are a useful way to evaluate how satisfied and empowered participants feel after completing AI training programs. These tools not only highlight current skill levels but also provide guidance on the next steps for improving proficiency. In the context of AI training, measuring confidence plays a key role in fostering psychological safety. It helps determine whether employees see AI as a valuable tool rather than a threat to their roles. Higher confidence levels are often linked to stronger engagement and greater job satisfaction. In fact, 94% of employees report they are more likely to stay with a company that invests in their career development. This focus on confidence complements other metrics like satisfaction and productivity, offering a fuller picture of training outcomes.

Tracking Real-World Impact of Training

Self-assessments go beyond gauging satisfaction by predicting how well employees apply their skills on the job. Confidence assessments act as an early indicator of skill gaps that could affect organizational performance. These tools also help measure learning transfer rates, which reflect the percentage of employees applying their new AI skills in the workplace - typically between 10% and 20%. By using surveys or journals, employees can share how they plan to implement their newly acquired skills, bridging the gap between training and day-to-day tasks. Additionally, organizations can calculate a "Relevance Score" to evaluate how closely the training content aligns with employees' actual responsibilities.

Simplicity in Implementation and Analysis

Self-assessments are easy to implement and cost-effective. Questionnaires and evaluation forms are inexpensive and can be quickly analyzed using statistical tools. When integrated with a Learning Management System (LMS), data collection becomes automated, with instant calculations of averages and visualizations of response trends. Using consistent rating scales - like 1–5 or 1–10 - for pre- and post-training assessments makes it simpler to track perceived improvements in competence. Another useful technique is the retrospective pre/post assessment, where learners evaluate their knowledge both before and after the course to measure confidence changes more accurately.
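Pre- and post-training ratings are easiest to compare when each pair is keyed by a learner ID. This sketch assumes both ratings use the same 1-5 scale. The IDs and values are hypothetical, and retrospective pre/post responses could be fed in the same way.

```python
from statistics import mean


def confidence_gain(pairs: dict[str, tuple[int, int]]) -> tuple[float, float]:
    """pairs maps a learner ID to (pre, post) ratings on the same scale.
    Returns (mean change in rating, share of learners who improved)."""
    if not pairs:
        raise ValueError("no paired responses")
    deltas = [post - pre for pre, post in pairs.values()]
    improved_share = sum(d > 0 for d in deltas) / len(deltas)
    return mean(deltas), improved_share


responses = {"e101": (2, 4), "e102": (3, 3), "e103": (1, 4), "e104": (4, 5)}
avg, improved = confidence_gain(responses)
print(avg, improved)  # 1.5 0.75
```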

Enhancing AI Training Programs

Tailored questions can provide deeper insights into training effectiveness. For example, asking "How confident would you be teaching your acquired knowledge and skills to someone else?" (AIHR) helps gauge mastery and readiness for peer-to-peer knowledge sharing. Including "select all that apply" questions can uncover obstacles - like insufficient resources, time constraints, or lack of supervisor support - that might hinder employees from applying their new AI skills. Combining subjective confidence questions with objective knowledge tests ensures that self-reported competence aligns with actual proficiency. This is especially important considering that 59% of leaders express limited or no confidence in their executive teams' Generative AI skills.


7. Manager Evaluation Scores

Manager evaluations provide a practical lens to assess how effectively employees are applying their AI training in real-world scenarios. These insights build on employee feedback and performance metrics, offering a well-rounded view of training outcomes.

Ability to Track Real-World Impact of Training

Managers play a key role in Level 3 (Behavior) evaluations in the Kirkpatrick model, which focus on how AI skills are being applied on the job. Unlike automated systems that simply track course completion, managers observe real-time behavioral changes as teams incorporate AI tools. This allows them to capture productivity improvements and proactive initiatives that automated systems might overlook. Another critical metric is "time to proficiency", which measures how quickly employees become adept at using AI tools. Reducing this time can lead to significant cost savings.

Effectiveness in Measuring Participant Satisfaction

Manager evaluations go beyond self-reported surveys by validating training outcomes through tangible improvements. For instance, AI adoption has been shown to increase sales productivity by up to 10% and enhance customer engagement by as much as 50%. Managers assess these gains by comparing metrics like individual sales performance or first-call resolution rates before and after training. They also identify obstacles - such as lack of resources, time constraints, or insufficient technical support - that may hinder employees from fully applying their new skills. As Peter Drucker aptly said:

"If you can't measure it, you can't improve it".

Ease of Implementation and Aggregation

Structured rubrics help ensure that manager evaluations are consistent and objective. When paired with HRIS and performance management tools, these assessments contribute to a thorough performance analysis. AI-powered analytics further streamline the process, automating feedback collection and reporting across large teams. Regular review meetings between managers and Learning & Development teams enable quick adjustments to training programs based on observed gaps. These evaluations also highlight top performers who can take on mentorship roles, fostering peer learning.

Relevance to Improving AI Training Programs

Manager insights are instrumental in refining AI training programs to better align with business goals. By connecting training efforts to broader organizational objectives, these evaluations help distinguish effective strategies from less impactful ones. One method involves calculating a "Relevance Score" to ensure training content remains aligned with employees' daily tasks and the company’s evolving needs. Dmitri Adler, Co-Founder of Data Society, emphasizes this point:

"The return on investment for data and AI training programs is ultimately measured via productivity. You typically need a full year of data to determine effectiveness, and the real ROI can be measured over 12 to 24 months".

8. Goal Completion Rate Feedback

Tracking the Impact of Training in the Workplace

Goal completion rates are a solid way to measure whether AI training is making a difference in real-world tasks. By comparing the number of tasks AI completes independently to those requiring human help, the AI-to-human completion ratio paints a clear picture of how effectively employees are using AI tools. A higher ratio means AI tools are being utilized more efficiently.
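Here is a minimal sketch of the AI-to-human completion ratio. It assumes each task outcome is logged as either "ai" (completed autonomously) or "human" (needed intervention). The labels and sample log are hypothetical.

```python
from collections import Counter


def completion_ratio(outcomes: list[str]) -> float:
    """Return AI-completed tasks per human-assisted task."""
    counts = Counter(outcomes)
    unknown = set(counts) - {"ai", "human"}
    if unknown:
        raise ValueError(f"unexpected labels: {unknown}")
    if counts["human"] == 0:
        return float("inf")  # every task was completed without human help
    return counts["ai"] / counts["human"]


log = ["ai", "ai", "human", "ai", "human", "ai", "ai", "ai"]
print(completion_ratio(log))  # 3.0
```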

For example, in June 2025, Taskade CEO John Xie used this metric to pinpoint where AI agents needed human intervention. By analyzing these gaps, his team improved agent memory and fine-tuned system prompts, boosting the rate of tasks completed autonomously.

Streamlining Data Collection and Insights

Beyond completion rates, the ease of collecting and analyzing this data plays a big role in generating actionable insights. Modern Learning Management Systems (LMS) can automatically track completion data and highlight where learners struggle or drop off. Pairing this with metrics like "time to proficiency" adds another layer of understanding - showing not just who completes training but how quickly they become competent. Another useful tool is the "Prompt-to-Result Satisfaction Score", which asks employees to rate AI output on a 1-5 scale based on how quickly, and in how many attempts, they reached a high-quality result.

At Brainy Bees, CEO Kinga Edwards tracked this score in June 2025 and found an initial team average of 2.7 out of 5. After improving their AI prompting training, the score jumped to 4.3 out of 5, cutting down time spent on manual edits significantly. Linking learner IDs from pre-assessments to post-training performance ensures these insights remain accurate and actionable.

Enhancing AI Training Programs

Metrics like these don’t just measure progress - they also reveal areas for improvement, turning training data into better business strategies. One such metric, the Resolution Gap Index (RGI), measures the gap between AI recommendations and actual resolutions, identifying where training needs to emphasize context.
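The source does not spell out how the RGI is calculated. One hypothetical reading is the share of cases where the actual resolution differed from the AI's recommendation, broken down by a context field such as customer tier. The segment names and resolutions below are invented.

```python
from collections import defaultdict


def rgi_by_segment(cases: list[tuple[str, str, str]]) -> dict[str, float]:
    """cases: (segment, ai_recommendation, actual_resolution) tuples.
    Hypothetical RGI: per segment, the share of cases whose actual
    resolution differed from what the AI recommended."""
    totals: defaultdict[str, int] = defaultdict(int)
    gaps: defaultdict[str, int] = defaultdict(int)
    for segment, recommended, actual in cases:
        totals[segment] += 1
        if recommended != actual:
            gaps[segment] += 1
    return {seg: gaps[seg] / totals[seg] for seg in totals}


cases = [
    ("free", "reset_cache", "reset_cache"),
    ("free", "update_plugin", "update_plugin"),
    ("pro", "reset_cache", "escalate_to_support"),
    ("pro", "update_plugin", "update_plugin"),
    ("pro", "reset_cache", "escalate_to_support"),
]
print({seg: round(r, 2) for seg, r in rgi_by_segment(cases).items()})
# {'free': 0.0, 'pro': 0.67}
```

A high gap concentrated in one segment, as with "pro" here, would point to the kind of missing context that training should address.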

For instance, in June 2025, WPBeginner founder Syed Balkhi discovered that while their AI provided technically correct answers, it often lacked context. By focusing its training on contextual understanding, the company significantly reduced its RGI and improved customer service outcomes.

"The goal isn't to just use AI - it's to use AI to speed things up or make things better." – Jessica Lau, Senior Content Specialist at Zapier

Goal completion metrics put that principle into practice. Without regular use, participants forget about 75% of new information within six days, so these metrics help organizations spot training gaps early and refine content before key skills are lost.

Conclusion

Feedback metrics turn AI training into a powerful tool for workforce development. By monitoring data like Net Promoter Scores and goal completion rates, organizations can pinpoint skill gaps, maximize training ROI, and clearly demonstrate the business impact of AI adoption. As Peter Drucker once said:

"If you can't measure it, you can't improve it".

These metrics create a clear, actionable path for ongoing improvement. With every dollar spent on AI systems reportedly yielding an average return of $3.50 and personalized learning pathways enhancing productivity by 44%, the financial case is strong. For instance, a $20 billion company could see an additional $500 million to $1 billion in profits within just 18 months. Achieving these results, however, requires consistent measurement and refinement.

NWA AI - Northwest Arkansas AI Innovation Hub (https://nwaai.org) follows this same philosophy in its regional training programs. By tracking metrics like learner satisfaction, skill development, and productivity improvements, they ensure that participants in Northwest Arkansas don’t just gain knowledge about AI - they actively use it to improve workflows and drive innovation. Their hands-on approach to AI literacy, tool application, and organizational integration mirrors the practices that help businesses across the country justify their training investments and accelerate workforce growth.

The key takeaway is balancing leading indicators like participation rates and engagement levels with lagging metrics such as revenue growth and employee retention. This dual focus allows organizations to identify early signs of training inefficiencies while also capturing the long-term outcomes that matter most to stakeholders.

Whether you're analyzing the AI-to-human task completion ratio, evaluating prompt-to-result satisfaction, or reviewing the Resolution Gap Index, these metrics act as a guide for continuous improvement. They help distinguish between training programs that truly add value and those that waste resources. In a world where 62% of C-suite executives cite talent shortages as the biggest obstacle to scaling AI, effective measurement isn’t just helpful - it’s essential to successful AI implementation.

FAQs

How can businesses align their AI training programs with their goals?

To make sure AI training programs align with business goals, the first step is to define what success looks like. Are you aiming for better productivity, increased sales, or fewer errors? Once you’ve nailed down these outcomes, work with key stakeholders to turn them into measurable objectives tied to specific key performance indicators (KPIs). For example, you might track metrics like training cost per employee, learning-transfer rates, or improvements in employee performance. This clarity helps calculate ROI - for instance, generating $3.50 in value for every $1 spent.

Tracking progress doesn’t stop there. It’s critical to measure and gather feedback continuously. Keep an eye on metrics like course completion rates, post-training performance, and how well employees apply new skills on the job. This data highlights areas for improvement and ensures the program stays on track. Tools powered by AI, such as adaptive learning systems, can further personalize training, helping employees gain the exact skills required to meet business goals. Companies like NWA AI offer programs designed to connect training with measurable outcomes, helping businesses in Northwest Arkansas see real, actionable results from their AI investments.

How does using metrics like Net Promoter Score (NPS) benefit AI training programs?

Using the Net Promoter Score (NPS) is a simple yet effective method to gauge how satisfied learners are and how likely they are to recommend the training. It takes subjective feedback and turns it into clear, actionable data, giving a solid measure of the program's success.

By monitoring NPS, organizations can pinpoint what’s working well, tackle areas that need improvement, and ensure the AI training aligns with participant expectations. This approach helps boost engagement and leads to better overall outcomes.

What are human preference scores, and how do they improve AI training?

Human preference scores are essentially ratings or rankings provided by actual users to assess AI-generated outputs. These scores serve as a direct, people-focused signal, guiding AI models to understand what users perceive as "good" or valuable. By integrating these scores into the training process, developers can adjust the model to better meet user expectations, align with societal norms, and address specific business goals.

This feedback loop - commonly implemented through techniques like reinforcement learning from human feedback (RLHF) - helps address errors that traditional statistical methods might miss. The result? Greater accuracy, reduced bias, and more dependable outcomes across a variety of tasks. For learners in Northwest Arkansas, NWA AI incorporates these preference-driven methods into its training programs, equipping participants with the skills to gather and use feedback effectively to create AI systems that make a meaningful impact.

Ready to Transform Your Business with AI?

Join our AI training programs and help Northwest Arkansas lead in the AI revolution.
